41 Lecture 601

Machine Learning

41.1 Definition

“The field of machine learning is concerned with the question of how to construct computer programs that automatically improve with experience.”

Mitchell, T. (1997). Machine Learning. McGraw Hill.

41.2 Origins

- Computer Science:
  - how to manually program computers to solve tasks
- Statistics:
  - what conclusions can be inferred from data
- Machine Learning:
  - intersection of computer science and statistics
  - how to get computers to program themselves from experience plus some initial structure
  - effective data capture, storage, indexing, retrieval and merging
  - computational tractability

Mitchell, T.M., 2006. The discipline of machine learning (Vol. 9). Pittsburgh, PA: Carnegie Mellon University, School of Computer Science, Machine Learning Department.

41.3 Types of machine learning

Machine learning approaches are divided into two main types:

- Supervised
  - training of a “predictive” model from data
  - one or more attributes of the dataset are used to “predict” another attribute
  - e.g., classification
- Unsupervised
  - discovery of descriptive patterns in data
  - commonly used in data mining
  - e.g., clustering

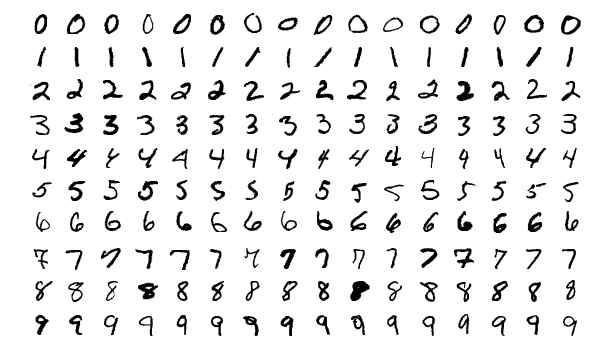

41.4 Supervised

- Training dataset
  - input attribute(s)
  - attribute to predict
- Testing dataset
  - input attribute(s)
  - attribute to predict
- Type of learning model
- Evaluation function
  - evaluates the difference between the predicted and the actual values of the attribute to predict in the testing data

by Josef Steppan via Wikimedia Commons, CC-BY-SA-4.0
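The four components listed above can be sketched in a few lines of Python. This is a minimal illustration under assumptions that are not in the slides: the toy data points, the choice of a 1-nearest-neighbour classifier as the type of learning model, and accuracy (share of correct predictions) as the evaluation function are all hypothetical choices made for this example.

```python
import math

# Hypothetical toy data: each row is (input attribute 1, input attribute 2, attribute to predict)
training_data = [(1, 1, "a"), (1, 2, "a"), (5, 5, "b"), (6, 5, "b")]
testing_data = [(2, 1, "a"), (5, 6, "b"), (4, 4, "b"), (3, 3, "b")]


def predict_1nn(training, inputs):
    """Type of learning model (assumed): 1-nearest neighbour.

    Predicts the label of the training row whose input attributes are
    closest, by Euclidean distance, to the given inputs.
    """
    nearest = min(training, key=lambda row: math.dist(row[:2], inputs))
    return nearest[2]


def accuracy(training, testing):
    """Evaluation function (assumed): share of testing rows whose predicted
    label matches the actual value of the attribute to predict."""
    correct = sum(predict_1nn(training, row[:2]) == row[2] for row in testing)
    return correct / len(testing)


print(accuracy(training_data, testing_data))  # 0.75
```

Here the point (3, 3) is closer to a training point labelled "a" and is misclassified, so the evaluation function reports 3 correct predictions out of 4. Because the model is evaluated only on rows it did not see during training, the score says something about how it will behave on new data.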

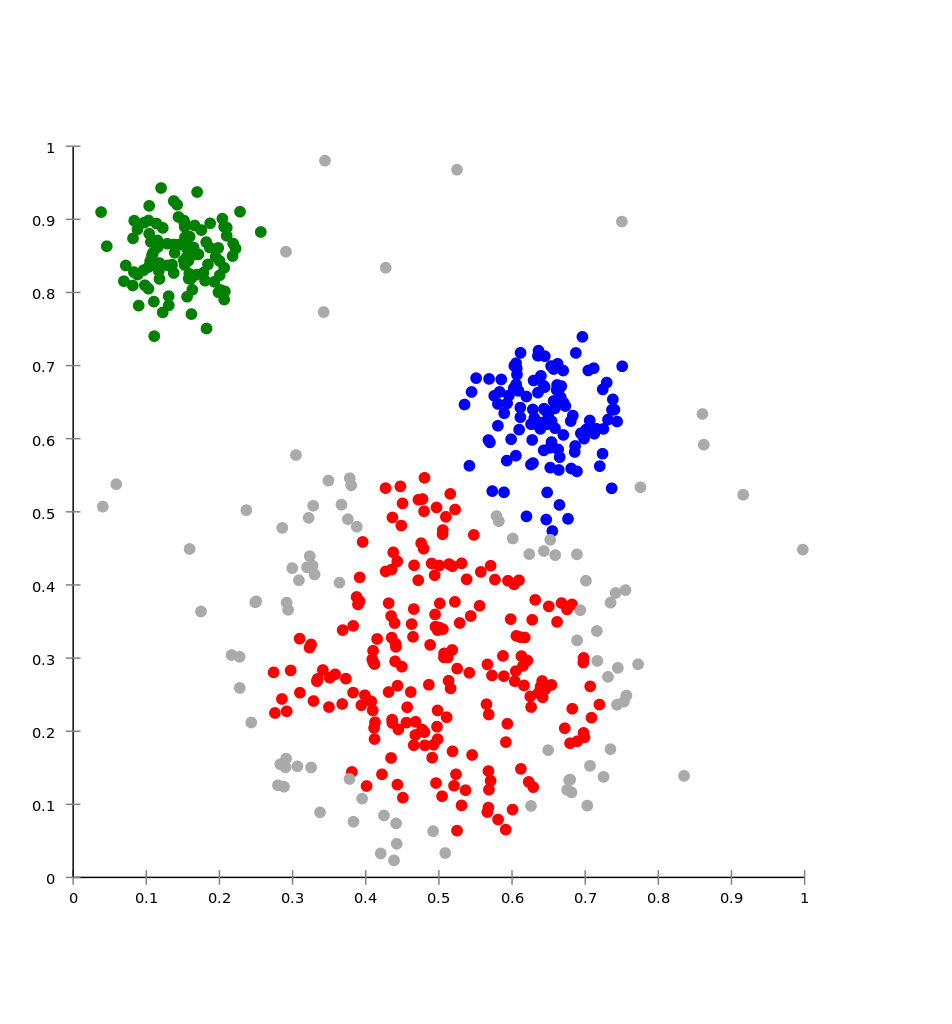

41.5 Unsupervised

- Dataset
  - input attribute(s) to explore
- Type of model for the learning process
  - most approaches are iterative
  - e.g., hierarchical clustering
- Evaluation function
  - evaluates the quality of the pattern under consideration at each iteration

by Chire via Wikimedia Commons, CC-BY-SA-3.0
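A minimal sketch of such an iterative process, assuming agglomerative (bottom-up) hierarchical clustering with single linkage on hypothetical 2D points: at each iteration the evaluation function (here, the distance between the two closest points of two clusters) scores every candidate pair of clusters, and the best-scoring pair is merged. The function names and the stopping rule (a target number of clusters) are illustrative choices, not part of the slides.

```python
import math
from itertools import combinations


def single_linkage(cluster_a, cluster_b):
    """Evaluation function: distance between the two closest points of two clusters."""
    return min(math.dist(p, q) for p in cluster_a for q in cluster_b)


def agglomerative_clustering(points, n_clusters):
    """Start with one cluster per point and iteratively merge the closest pair."""
    clusters = [[p] for p in points]
    merge_distances = []  # evaluation score of the pair merged at each iteration
    while len(clusters) > n_clusters:
        # Evaluate every pair of current clusters and keep the closest one
        i, j = min(
            combinations(range(len(clusters)), 2),
            key=lambda pair: single_linkage(clusters[pair[0]], clusters[pair[1]]),
        )
        merge_distances.append(single_linkage(clusters[i], clusters[j]))
        clusters[i] = clusters[i] + clusters[j]
        del clusters[j]  # j > i, so index i is unaffected
    return clusters, merge_distances


points = [(0, 0), (0, 1), (5, 5), (5, 6), (10, 0)]
clusters, distances = agglomerative_clustering(points, n_clusters=3)
print(clusters)   # [[(0, 0), (0, 1)], [(5, 5), (5, 6)], [(10, 0)]]
print(distances)  # [1.0, 1.0]
```

Continuing the merges until a single cluster remains, and recording each merge, would give the full hierarchy (the dendrogram); stopping at `n_clusters` cuts it at one level.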

41.6 … more

- Semi-supervised learning
  - between unsupervised and supervised learning
  - combines a small amount of labelled data with a larger unlabelled dataset
  - relies on the continuity, cluster, and manifold (lower dimensionality) assumptions
- Reinforcement learning
  - agents are trained to take actions that maximize a cumulative reward
  - balance between
    - exploration (of new paths/options)
    - exploitation (of current knowledge)
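The exploration/exploitation balance in reinforcement learning can be illustrated with an epsilon-greedy agent on a multi-armed bandit, the simplest reinforcement learning setting (a single state and several possible actions). This is a sketch under hypothetical assumptions: the three actions return fixed rewards, and with probability `epsilon` the agent explores a random action, otherwise it exploits the action with the highest estimated reward so far.

```python
import random


def epsilon_greedy(rewards, epsilon, steps, seed=0):
    """Run an epsilon-greedy agent and return how often each action was chosen.

    rewards: hypothetical fixed reward returned by each action
    epsilon: probability of exploring a random action instead of exploiting
    """
    rng = random.Random(seed)
    n_actions = len(rewards)
    estimates = [0.0] * n_actions  # current knowledge: estimated reward per action
    counts = [0] * n_actions
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n_actions)  # exploration
        else:
            action = max(range(n_actions), key=lambda a: estimates[a])  # exploitation
        reward = rewards[action]
        counts[action] += 1
        # Incremental average of the rewards observed for this action
        estimates[action] += (reward - estimates[action]) / counts[action]
    return counts


# Pure exploitation never discovers that the third action is the best one
print(epsilon_greedy([1.0, 2.0, 3.0], epsilon=0.0, steps=1000))  # [1000, 0, 0]
print(epsilon_greedy([1.0, 2.0, 3.0], epsilon=0.1, steps=2000))
```

With `epsilon=0.0` the agent keeps exploiting the first action it tried; a small amount of exploration lets it find, and then mostly exploit, the better action. Full reinforcement learning problems add states and delayed rewards on top of this trade-off.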

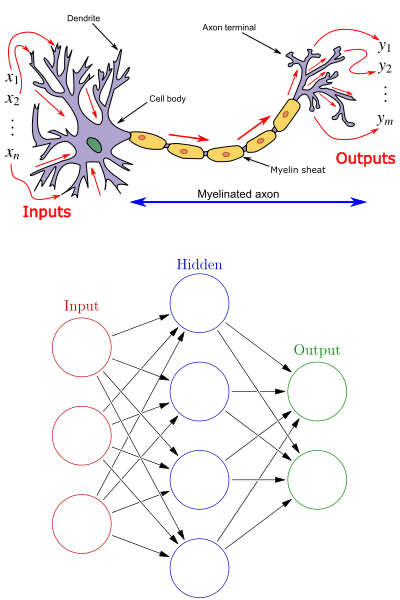

41.7 Neural networks

Supervised learning approach that simulates simplified neurons

- Classic model with 3 sets (layers) of neurons
  - input neurons
  - output neurons
  - hidden layer
    - combines input values using weights
    - applies an activation function
- The training algorithm is used to determine the best weights

by Egm4313.s12 and Glosser.ca via Wikimedia Commons, CC-BY-SA-3.0
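The role of the weights, the activation function and the training algorithm can be sketched for a single neuron. This minimal sketch omits the hidden layer and assumes a sigmoid activation, a cross-entropy loss and plain gradient descent as the training algorithm; full networks are commonly trained with backpropagation, which applies the same gradient-based idea layer by layer. The training data (the logical OR of two inputs), the learning rate and the number of epochs are hypothetical.

```python
import math


def sigmoid(z):
    """Activation function: squashes the weighted sum into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(weights, bias, inputs):
    """Combine the input values using weights, then apply the activation function."""
    return sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)


def train(data, learning_rate=1.0, epochs=2000):
    """Training algorithm (assumed): gradient descent on the mean cross-entropy loss."""
    weights, bias = [0.0, 0.0], 0.0
    losses = []
    for _ in range(epochs):
        grad_w, grad_b, loss = [0.0, 0.0], 0.0, 0.0
        for inputs, target in data:
            out = neuron(weights, bias, inputs)
            # Cross-entropy loss for a binary target
            loss -= math.log(out) if target == 1 else math.log(1 - out)
            # For sigmoid + cross-entropy, the gradient w.r.t. the weighted sum is (out - target)
            for k, x in enumerate(inputs):
                grad_w[k] += (out - target) * x
            grad_b += out - target
        losses.append(loss / len(data))
        # Move the weights a small step against the average gradient
        weights = [w - learning_rate * g / len(data) for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b / len(data)
    return weights, bias, losses


# Hypothetical training data: the logical OR of two binary inputs
or_data = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
weights, bias, losses = train(or_data)
print([round(neuron(weights, bias, x)) for x, _ in or_data])  # [0, 1, 1, 1]
```

The weights start at zero and the training loop gradually adjusts them until the neuron's outputs match the targets, which is what "determining the best weights" amounts to in this simplified setting.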

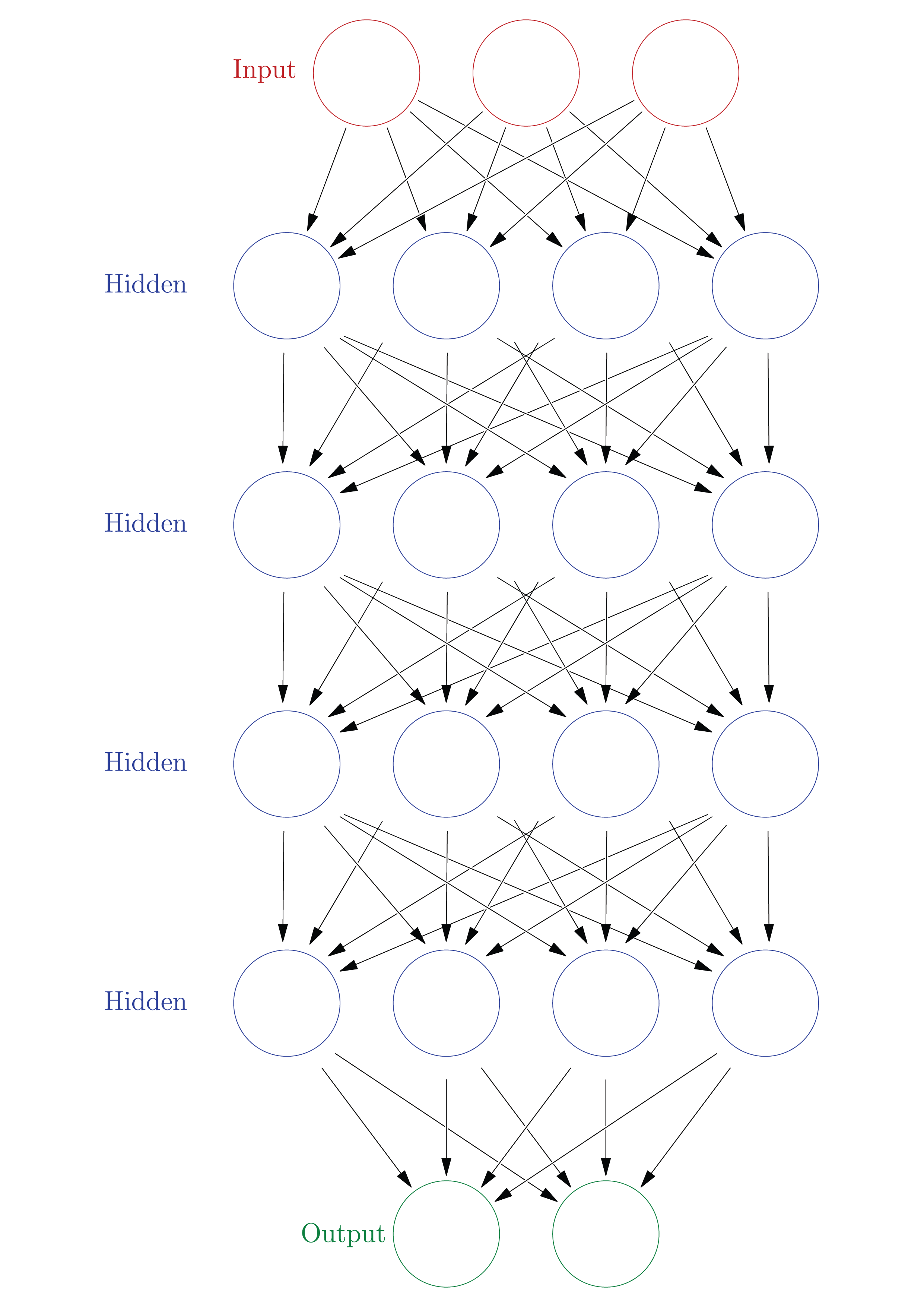

41.8 Deep neural networks

Neural networks with multiple hidden layers

The fundamental idea is that “deeper” neurons allow for the encoding of more complex characteristics

Example: De Sabbata, S. and Liu, P. (2019). Deep learning geodemographics with autoencoders and geographic convolution. In proceedings of the 22nd AGILE Conference on Geographic Information Science, Limassol, Cyprus.

derived from work by Glosser.ca via Wikimedia Commons, CC-BY-SA-3.0
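To illustrate how deeper layers can encode more complex characteristics, the sketch below chains several layers of sigmoid neurons. It assumes hypothetical, hand-picked weights rather than trained ones: the first hidden layer detects simple patterns (OR and NAND of two inputs), the second combines them into XOR, and the later layers combine that result with a third input to compute the parity of three bits, a feature that no single neuron can represent because it is not linearly separable.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def layer(neurons, inputs):
    """One fully connected layer: each neuron is a (weights, bias) pair."""
    return [
        sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)
        for weights, bias in neurons
    ]


def forward(network, inputs):
    """Feed the inputs through each layer in turn."""
    values = list(inputs)
    for neurons in network:
        values = layer(neurons, values)
    return values


# Hypothetical hand-picked weights (a trained network would learn its own)
parity_network = [
    # Hidden layer 1 (inputs x1, x2, x3): OR(x1, x2), NAND(x1, x2), approximate copy of x3
    [([20, 20, 0], -10), ([-20, -20, 0], 30), ([0, 0, 20], -10)],
    # Hidden layer 2: AND of the first two features = XOR(x1, x2), approximate copy of x3
    [([20, 20, 0], -30), ([0, 0, 20], -10)],
    # Hidden layer 3: OR and NAND of XOR(x1, x2) and x3
    [([20, 20], -10), ([-20, -20], 30)],
    # Output layer: AND of the two features = XOR(XOR(x1, x2), x3)
    [([20, 20], -30)],
]

for bits in [(0, 0, 0), (1, 0, 0), (1, 1, 0), (1, 1, 1)]:
    print(bits, round(forward(parity_network, bits)[0]))
```

Each layer only combines the features produced by the layer before it, yet the output neuron ends up computing a function of all three inputs that none of the earlier neurons could compute on its own.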

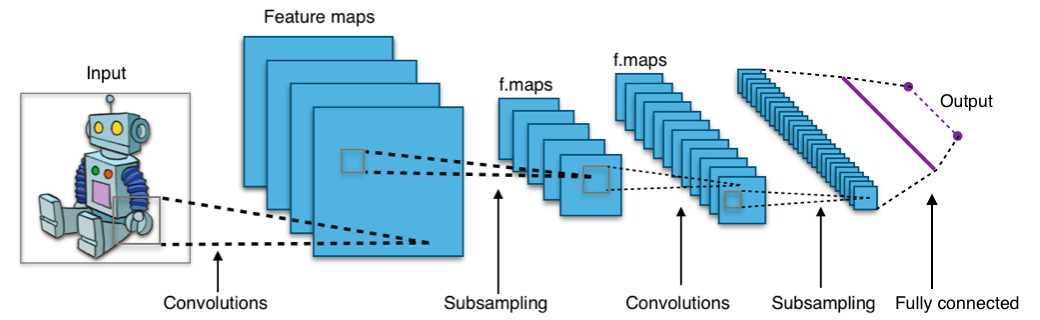

41.9 Convolutional neural networks

Deep neural networks with convolutional hidden layers

- used very successfully for object recognition in images
- convolutional hidden layers “convolve” the images
  - a process similar to applying image filters, such as smoothing filters

Example: Liu, P. and De Sabbata, S. (2019). Learning Digital Geographies through a Graph-Based Semi-supervised Approach. In proceedings of the 15th International Conference on GeoComputation, Queenstown, New Zealand.

by Aphex34 via Wikimedia Commons, CC-BY-SA-4.0
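The convolution step can be sketched as sliding a small kernel over an image and taking a weighted sum at each position. In this minimal sketch the 3x3 kernel is a hypothetical mean (smoothing) filter; in a convolutional neural network the kernel values are weights learned during training, and many kernels are applied in each layer. As in most deep learning libraries, the kernel is not flipped (strictly, this is cross-correlation), which makes no difference for a symmetric kernel like this one.

```python
def convolve2d(image, kernel):
    """'Valid' 2D convolution: slide the kernel over every position where it fits."""
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    output = []
    for i in range(out_rows):
        row = []
        for j in range(out_cols):
            # Weighted sum of the image patch currently under the kernel
            total = sum(
                kernel[a][b] * image[i + a][j + b]
                for a in range(k_rows)
                for b in range(k_cols)
            )
            row.append(total)
        output.append(row)
    return output


# Hypothetical 5x5 greyscale image with a single bright pixel in the centre
image = [[0] * 5 for _ in range(5)]
image[2][2] = 9

# Mean (smoothing) filter: every weight is 1/9
smoothing_kernel = [[1 / 9] * 3 for _ in range(3)]

for row in convolve2d(image, smoothing_kernel):
    print([round(v, 3) for v in row])  # each row prints [1.0, 1.0, 1.0]
```

The single bright pixel is spread evenly over its neighbourhood, which is the smoothing effect; a kernel with different weights (learned, in a CNN) would instead respond to other local patterns such as edges.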

41.10 Limits

- Complexity
- Training dataset quality
  - garbage in, garbage out
  - e.g., Facial Recognition Is Accurate, if You’re a White Guy by Steve Lohr (New York Times, Feb. 9, 2018)

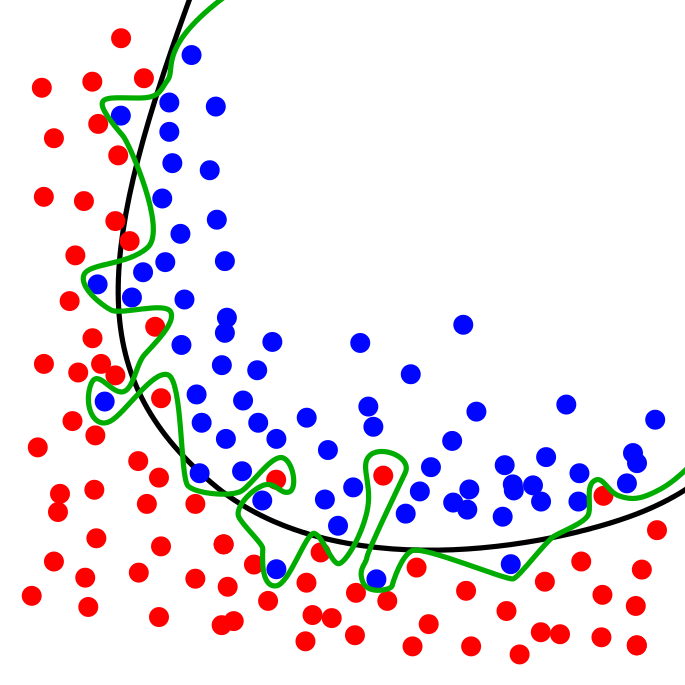

- Overfitting
  - creating a model that fits the training data perfectly, but does not generalise well enough to new data to be useful

by Chabacano via Wikimedia Commons, CC-BY-SA-4.0
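Overfitting can be sketched with the hypothetical data below: four training points lying roughly on the line y = x (with some noise), and noise-free testing points from the same line. A cubic polynomial that passes exactly through all four training points has zero training error, while a straight line fitted by least squares does not, yet the line generalises much better. Both models and the data are illustrative assumptions.

```python
def linear_fit(xs, ys):
    """Least-squares straight line; returns a prediction function."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    intercept = mean_y - slope * mean_x
    return lambda x: slope * x + intercept


def interpolating_polynomial(xs, ys):
    """Lagrange polynomial through every training point (degree len(xs) - 1)."""
    def predict(x):
        total = 0.0
        for j, (xj, yj) in enumerate(zip(xs, ys)):
            basis = 1.0
            for m, xm in enumerate(xs):
                if m != j:
                    basis *= (x - xm) / (xj - xm)
            total += yj * basis
        return total
    return predict


def mse(model, xs, ys):
    """Evaluation function: mean squared error."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Hypothetical data: training points are y = x plus noise, testing points are noise-free
train_x, train_y = [0, 1, 2, 3], [0, 1.5, 1.5, 3]
test_x = [0.5, 1.5, 2.5, 4]
test_y = list(test_x)

for name, fit in [("linear", linear_fit), ("cubic", interpolating_polynomial)]:
    model = fit(train_x, train_y)
    print(name, round(mse(model, train_x, train_y), 4), round(mse(model, test_x, test_y), 4))
```

The line has a training error of 0.1125 and a testing error of about 0.02, whereas the cubic has a training error of 0 but a testing error of about 6.4, mostly from the point x = 4, just outside the range of the training data. The cubic has effectively memorised the noise in the training data.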